4. Data¶

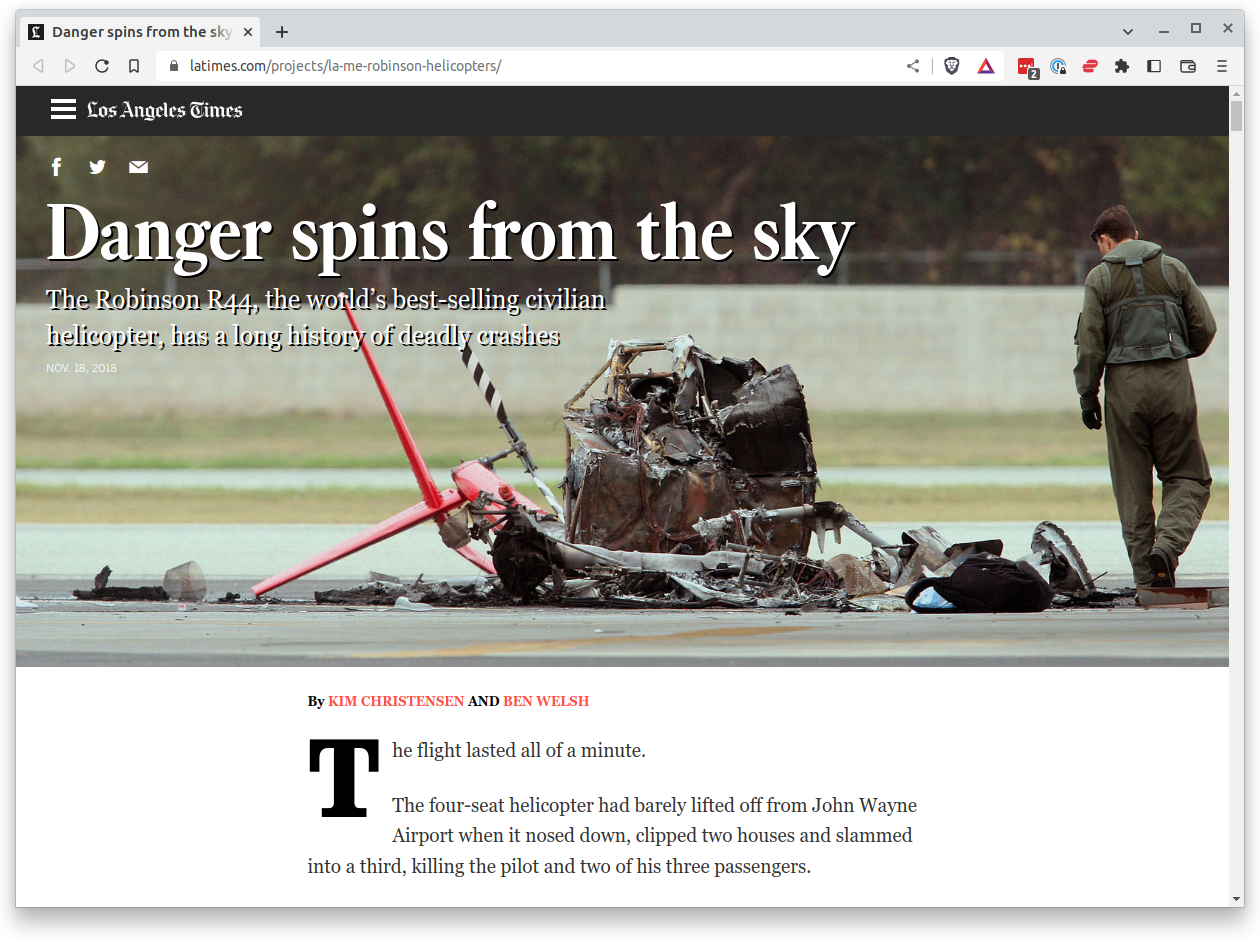

In 2018, the Los Angeles Times published an investigation headlined, “The Robinson R44, the world’s best-selling civilian helicopter, has a long history of deadly crashes.”

It reported that Robinson’s R44 led all major models with the highest fatal accident rate from 2006 to 2016. The analysis was published on GitHub as a series of Jupyter notebooks.

The findings were drawn from two key datasets:

The National Transportation Safety Board’s Aviation Accident Database

The Federal Aviation Administration’s General Aviation and Part 135 Activity Survey

After a significant amount of work gathering and cleaning the source data, the number of accidents for each helicopter model were normalized using the flight hour estimates in the survey. For the purposes of this demonstration, we will read in tidied versions of each file that are ready for analysis.

The data are structured in rows of comma-separated values. This is known as a CSV file. It is the most common way you will find data published online. The pandas library is able to read in files from a variety formats, including CSV.

Show code cell content

import pandas as pd

4.1. The read_csv method¶

Scroll down to the first open cell. There we will import the first CSV file using the read_csv function included with pandas.

pd.read_csv("https://raw.githubusercontent.com/palewire/first-python-notebook/main/docs/src/_static/ntsb-accidents.csv")

| event_id | ntsb_make | ntsb_model | ntsb_number | year | date | city | state | country | total_fatalities | latimes_make | latimes_model | latimes_make_and_model | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 20061222X01838 | BELL | 407 | NYC07FA048 | 2006 | 12/14/2006 00:00:00 | DAGSBORO | DE | USA | 2 | BELL | 407 | BELL 407 |

| 1 | 20060817X01187 | ROBINSON | R22 BETA | LAX06LA257 | 2006 | 08/10/2006 00:00:00 | TUCSON | AZ | USA | 1 | ROBINSON | R22 | ROBINSON R22 |

| 2 | 20060111X00044 | ROBINSON | R44 | MIA06FA039 | 2006 | 01/01/2006 00:00:00 | GRAND RIDGE | FL | USA | 3 | ROBINSON | R44 | ROBINSON R44 |

| 3 | 20060419X00461 | ROBINSON | R44 II | DFW06FA102 | 2006 | 04/13/2006 00:00:00 | FREDERICKSBURG | TX | USA | 2 | ROBINSON | R44 | ROBINSON R44 |

| 4 | 20060208X00181 | ROBINSON | R44 | SEA06LA052 | 2006 | 02/06/2006 00:00:00 | HELENA | MT | USA | 1 | ROBINSON | R44 | ROBINSON R44 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 158 | 20160711X32921 | BELL HELICOPTER TEXTRON CANADA | 407 | ERA16FA248 | 2016 | 07/11/2016 00:00:00 | HICKORY | KY | USA | 1 | BELL | 407 | BELL 407 |

| 159 | 20160804X45514 | SCHWEIZER | 269C 1 | CEN16FA304 | 2016 | 08/03/2016 00:00:00 | JEANERETTE | LA | USA | 1 | SCHWEIZER | 269 | SCHWEIZER 269 |

| 160 | 20160404X74644 | BELL | 206 | ERA16FA144 | 2016 | 04/04/2016 00:00:00 | PIGEON FORGE | TN | USA | 5 | BELL | 206 | BELL 206 |

| 161 | 20160507X31120 | AIRBUS | AS350 | ANC16FA023 | 2016 | 05/06/2016 00:00:00 | SKAGWAY | AK | USA | 1 | AIRBUS | 350 | AIRBUS 350 |

| 162 | 20160612X85856 | ROBINSON HELICOPTER COMPANY | R44 II | CEN16FA215 | 2016 | 06/12/2016 00:00:00 | JONESBORO | AR | USA | 1 | ROBINSON | R44 | ROBINSON R44 |

163 rows × 13 columns

Warning

You need the exact URL shared in the example to access the file. While you could laboriously type it out, feel free to copy and paste it into your notebook.

After you run the cell, you should see a big table output to your notebook. It is a “DataFrame” where pandas has structured the CSV data into rows and columns, just like Excel or other spreadsheet software might. Take a moment to look at the columns and rows in the output, which contain the data we’ll use in our analysis.

Note

On the left-hand side, you’ll see a bolded number incrementing upward from zero that’s not present in our source data file. This is what pandas calls the index. It is a separate column created automatically and used to identify each row. The index is not considered part of the data, but it is used to reference the rows of the DataFrame or Series in advanced operations that are beyond the scope of this class.

A major advantage of Jupyter over spreadsheets is that rather than manipulating the data through a haphazard series of clicks and keypunches, we will be gradually grinding it down using a computer programming script that is transparent and reproducible.

To do more with your DataFrame, we need to store it so it can be reused in subsequent cells. We can do this by saving it in a variable, just as we did in with our number in Chapter 2.

Go back to your latest cell and change it to this. Rerun it.

accident_list = pd.read_csv("https://raw.githubusercontent.com/palewire/first-python-notebook/main/docs/src/_static/ntsb-accidents.csv")

You shouldn’t see anything. That’s a good thing. It means our DataFrame has been saved under the name accident_list, which we can now begin interacting with in the following cells.

We can do this by calling “methods” that pandas makes available to all DataFrames. You may not have known it at the time, but read_csv is one of these methods. There are dozens more that can do all sorts of interesting things. Let’s start with some easy ones that analysts use all the time.

4.2. The head method¶

To preview the first few rows of the dataset, try the head method. Add a new cell, type this in and hit the play button again.

accident_list.head()

| event_id | ntsb_make | ntsb_model | ntsb_number | year | date | city | state | country | total_fatalities | latimes_make | latimes_model | latimes_make_and_model | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 20061222X01838 | BELL | 407 | NYC07FA048 | 2006 | 12/14/2006 00:00:00 | DAGSBORO | DE | USA | 2 | BELL | 407 | BELL 407 |

| 1 | 20060817X01187 | ROBINSON | R22 BETA | LAX06LA257 | 2006 | 08/10/2006 00:00:00 | TUCSON | AZ | USA | 1 | ROBINSON | R22 | ROBINSON R22 |

| 2 | 20060111X00044 | ROBINSON | R44 | MIA06FA039 | 2006 | 01/01/2006 00:00:00 | GRAND RIDGE | FL | USA | 3 | ROBINSON | R44 | ROBINSON R44 |

| 3 | 20060419X00461 | ROBINSON | R44 II | DFW06FA102 | 2006 | 04/13/2006 00:00:00 | FREDERICKSBURG | TX | USA | 2 | ROBINSON | R44 | ROBINSON R44 |

| 4 | 20060208X00181 | ROBINSON | R44 | SEA06LA052 | 2006 | 02/06/2006 00:00:00 | HELENA | MT | USA | 1 | ROBINSON | R44 | ROBINSON R44 |

It serves up the first five rows by default. If you want a different number, submit it as an input.

accident_list.head(1)

| event_id | ntsb_make | ntsb_model | ntsb_number | year | date | city | state | country | total_fatalities | latimes_make | latimes_model | latimes_make_and_model | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 20061222X01838 | BELL | 407 | NYC07FA048 | 2006 | 12/14/2006 00:00:00 | DAGSBORO | DE | USA | 2 | BELL | 407 | BELL 407 |

4.3. The info method¶

To get a look at all of the columns and what type of data they store, add another cell and try the info method. Look carefully at the results and you’ll see we have 163 fatal accidents to review.

accident_list.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 163 entries, 0 to 162

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 event_id 163 non-null object

1 ntsb_make 163 non-null object

2 ntsb_model 163 non-null object

3 ntsb_number 163 non-null object

4 year 163 non-null int64

5 date 163 non-null object

6 city 163 non-null object

7 state 162 non-null object

8 country 163 non-null object

9 total_fatalities 163 non-null int64

10 latimes_make 163 non-null object

11 latimes_model 163 non-null object

12 latimes_make_and_model 163 non-null object

dtypes: int64(2), object(11)

memory usage: 16.7+ KB

Now that you’ve got some data imported, we’re ready to begin our analysis.